- Type: Bug
- Resolution: Fixed
- Priority: Blocker

Jenkins version: 2.107.1

Plugin version: 1.5

Kubernetes version: Server Version: version.Info{Major:"1", Minor:"9+", GitVersion:"v1.9.6-gke.0", GitCommit:"cb151369f60073317da686a6ce7de36abe2bda8d", GitTreeState:"clean", BuildDate:"2018-03-21T19:01:20Z", GoVersion:"go1.9.3b4", Compiler:"gc", Platform:"linux/amd64"}

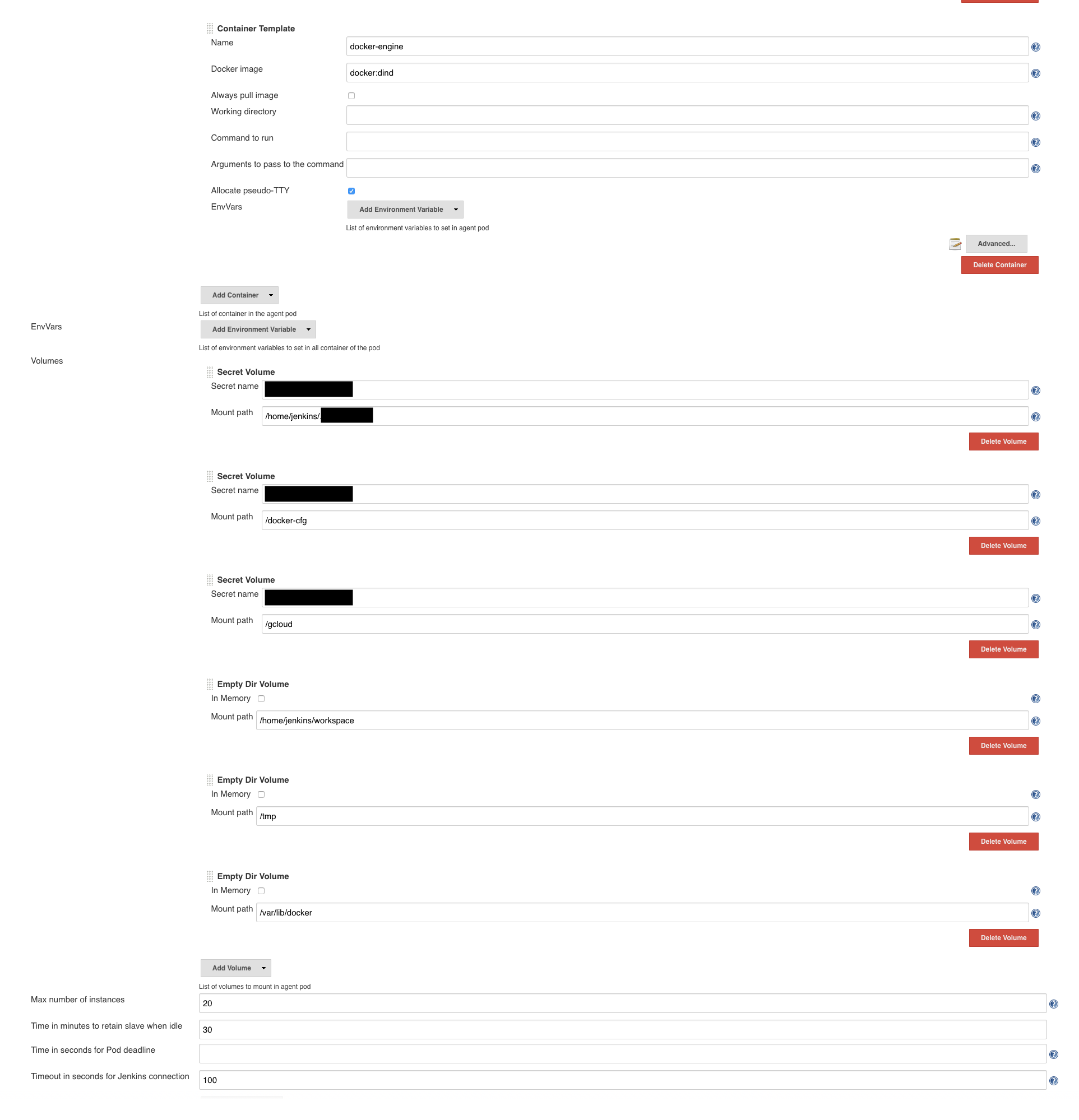

I deployed a Jenkins instance via Helm on Friday, and it was spinning up pods just fine. When I came back on Monday, it could not start any. The logs and the pod template configuration are below.

The logs are full of entries like this:

{{Apr 02, 2018 10:52:26 PM org.csanchez.jenkins.plugins.kubernetes.KubernetesSlave _terminate}}

{{INFO: Terminating Kubernetes instance for agent standard-1-r0fd4}}

{{Apr 02, 2018 10:52:26 PM okhttp3.internal.platform.Platform log}}

{{INFO: ALPN callback dropped: HTTP/2 is disabled. Is alpn-boot on the boot class path?}}

{{Apr 02, 2018 10:52:26 PM org.csanchez.jenkins.plugins.kubernetes.KubernetesLauncher launch}}

{{WARNING: Error in provisioning; agent=KubernetesSlave name: standard-1-rxq2t, template=PodTemplate\{inheritFrom='', name='standard-1', namespace='', instanceCap=20, idleMinutes=30, label='standard-1 worker', nodeSelector='', nodeUsageMode=NORMAL, customWorkspaceVolumeEnabled=true, workspaceVolume=org.csanchez.jenkins.plugins.kubernetes.volumes.workspace.EmptyDirWorkspaceVolume@565b8276, volumes=[org.csanchez.jenkins.plugins.kubernetes.volumes.SecretVolume@20a0b5ee, org.csanchez.jenkins.plugins.kubernetes.volumes.SecretVolume@4bc6165a, org.csanchez.jenkins.plugins.kubernetes.volumes.SecretVolume@41046566, org.csanchez.jenkins.plugins.kubernetes.volumes.EmptyDirVolume@372e4c46, org.csanchez.jenkins.plugins.kubernetes.volumes.EmptyDirVolume@12049088, org.csanchez.jenkins.plugins.kubernetes.volumes.EmptyDirVolume@690b3054], containers=[ContainerTemplate\{name='jnlp', image='gcr.io/myproj/cyrusbio-jnlp-minion:master', alwaysPullImage=true, workingDir='/home/jenkins', command='', args='', ttyEnabled=true, resourceRequestCpu='', resourceRequestMemory='', resourceLimitCpu='', resourceLimitMemory='', envVars=[KeyValueEnvVar [getValue()=tcp://127.0.0.1:2375, getKey()=DOCKER_HOST], KeyValueEnvVar [getValue()=/gcloud/credentials.json, getKey()=GOOGLE_APPLICATION_CREDENTIALS], KeyValueEnvVar [getValue()=/gcloud/credentials.json, getKey()=CLOUDSDK_AUTH_CREDENTIAL_FILE_OVERRIDE]], livenessProbe=org.csanchez.jenkins.plugins.kubernetes.ContainerLivenessProbe@3c38cba6}, ContainerTemplate\{name='docker-engine', image='docker:dind', privileged=true, workingDir='', command='', args='', ttyEnabled=true, resourceRequestCpu='1', resourceRequestMemory='2Gi', resourceLimitCpu='2100m', resourceLimitMemory='3Gi', livenessProbe=org.csanchez.jenkins.plugins.kubernetes.ContainerLivenessProbe@b54246a}]}}}

{{io.fabric8.kubernetes.client.KubernetesClientException: Failure executing: POST at: https://kubernetes.default/api/v1/namespaces/default/pods. Message: Pod "standard-1-rxq2t" is invalid: spec.containers[0].volumeMounts[6].mountPath: Required value. Received status: Status(apiVersion=v1, code=422, details=StatusDetails(causes=[StatusCause(field=spec.containers[0].volumeMounts[6].mountPath, message=Required value, reason=FieldValueRequired, additionalProperties=\{})], group=null, kind=Pod, name=standard-1-rxq2t, retryAfterSeconds=null, uid=null, additionalProperties=\{}), kind=Status, message=Pod "standard-1-rxq2t" is invalid: spec.containers[0].volumeMounts[6].mountPath: Required value, metadata=ListMeta(resourceVersion=null, selfLink=null, additionalProperties=\{}), reason=Invalid, status=Failure, additionalProperties=\{}).}}

{{at io.fabric8.kubernetes.client.dsl.base.OperationSupport.requestFailure(OperationSupport.java:472)}}

{{at io.fabric8.kubernetes.client.dsl.base.OperationSupport.assertResponseCode(OperationSupport.java:411)}}

{{at io.fabric8.kubernetes.client.dsl.base.OperationSupport.handleResponse(OperationSupport.java:381)}}

{{at io.fabric8.kubernetes.client.dsl.base.OperationSupport.handleResponse(OperationSupport.java:344)}}

{{at io.fabric8.kubernetes.client.dsl.base.OperationSupport.handleCreate(OperationSupport.java:227)}}

{{at io.fabric8.kubernetes.client.dsl.base.BaseOperation.handleCreate(BaseOperation.java:756)}}

{{at io.fabric8.kubernetes.client.dsl.base.BaseOperation.create(BaseOperation.java:334)}}

{{at org.csanchez.jenkins.plugins.kubernetes.KubernetesLauncher.launch(KubernetesLauncher.java:105)}}

{{at hudson.slaves.SlaveComputer$1.call(SlaveComputer.java:288)}}

{{at jenkins.util.ContextResettingExecutorService$2.call(ContextResettingExecutorService.java:46)}}

{{at java.util.concurrent.FutureTask.run(FutureTask.java:266)}}

{{at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)}}

{{at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)}}

{{at java.lang.Thread.run(Thread.java:748)}}

{{Apr 02, 2018 10:52:26 PM org.csanchez.jenkins.plugins.kubernetes.KubernetesSlave _terminate}}

{{INFO: Terminating Kubernetes instance for agent standard-1-rxq2t}}

{{Apr 02, 2018 10:52:26 PM okhttp3.internal.platform.Platform log}}

{{INFO: ALPN callback dropped: HTTP/2 is disabled. Is alpn-boot on the boot class path?}}

{{Apr 02, 2018 10:52:26 PM org.csanchez.jenkins.plugins.kubernetes.KubernetesLauncher launch}}

{{WARNING: Error in provisioning; agent=KubernetesSlave name: standard-1-2btdk, template=PodTemplate\{inheritFrom='', name='standard-1', namespace='', instanceCap=20, idleMinutes=30, label='standard-1 worker', nodeSelector='', nodeUsageMode=NORMAL, customWorkspaceVolumeEnabled=true, workspaceVolume=org.csanchez.jenkins.plugins.kubernetes.volumes.workspace.EmptyDirWorkspaceVolume@565b8276, volumes=[org.csanchez.jenkins.plugins.kubernetes.volumes.SecretVolume@20a0b5ee, org.csanchez.jenkins.plugins.kubernetes.volumes.SecretVolume@4bc6165a, org.csanchez.jenkins.plugins.kubernetes.volumes.SecretVolume@41046566, org.csanchez.jenkins.plugins.kubernetes.volumes.EmptyDirVolume@372e4c46, org.csanchez.jenkins.plugins.kubernetes.volumes.EmptyDirVolume@12049088, org.csanchez.jenkins.plugins.kubernetes.volumes.EmptyDirVolume@690b3054], containers=[ContainerTemplate\{name='jnlp', image='gcr.io/myproj/cyrusbio-jnlp-minion:master', alwaysPullImage=true, workingDir='/home/jenkins', command='', args='', ttyEnabled=true, resourceRequestCpu='', resourceRequestMemory='', resourceLimitCpu='', resourceLimitMemory='', envVars=[KeyValueEnvVar [getValue()=tcp://127.0.0.1:2375, getKey()=DOCKER_HOST], KeyValueEnvVar [getValue()=/gcloud/credentials.json, getKey()=GOOGLE_APPLICATION_CREDENTIALS], KeyValueEnvVar [getValue()=/gcloud/credentials.json, getKey()=CLOUDSDK_AUTH_CREDENTIAL_FILE_OVERRIDE]], livenessProbe=org.csanchez.jenkins.plugins.kubernetes.ContainerLivenessProbe@3c38cba6}, ContainerTemplate\{name='docker-engine', image='docker:dind', privileged=true, workingDir='', command='', args='', ttyEnabled=true, resourceRequestCpu='1', resourceRequestMemory='2Gi', resourceLimitCpu='2100m', resourceLimitMemory='3Gi', livenessProbe=org.csanchez.jenkins.plugins.kubernetes.ContainerLivenessProbe@b54246a}]}}}

{{io.fabric8.kubernetes.client.KubernetesClientException: Failure executing: POST at: https://kubernetes.default/api/v1/namespaces/default/pods. Message: Pod "standard-1-2btdk" is invalid: spec.containers[0].volumeMounts[6].mountPath: Required value. Received status: Status(apiVersion=v1, code=422, details=StatusDetails(causes=[StatusCause(field=spec.containers[0].volumeMounts[6].mountPath, message=Required value, reason=FieldValueRequired, additionalProperties=\{})], group=null, kind=Pod, name=standard-1-2btdk, retryAfterSeconds=null, uid=null, additionalProperties=\{}), kind=Status, message=Pod "standard-1-2btdk" is invalid: spec.containers[0].volumeMounts[6].mountPath: Required value, metadata=ListMeta(resourceVersion=null, selfLink=null, additionalProperties=\{}), reason=Invalid, status=Failure, additionalProperties=\{}).}}

{{at io.fabric8.kubernetes.client.dsl.base.OperationSupport.requestFailure(OperationSupport.java:472)}}

{{at io.fabric8.kubernetes.client.dsl.base.OperationSupport.assertResponseCode(OperationSupport.java:411)}}

{{at io.fabric8.kubernetes.client.dsl.base.OperationSupport.handleResponse(OperationSupport.java:381)}}

{{at io.fabric8.kubernetes.client.dsl.base.OperationSupport.handleResponse(OperationSupport.java:344)}}

{{at io.fabric8.kubernetes.client.dsl.base.OperationSupport.handleCreate(OperationSupport.java:227)}}

{{at io.fabric8.kubernetes.client.dsl.base.BaseOperation.handleCreate(BaseOperation.java:756)}}

{{at io.fabric8.kubernetes.client.dsl.base.BaseOperation.create(BaseOperation.java:334)}}

{{at org.csanchez.jenkins.plugins.kubernetes.KubernetesLauncher.launch(KubernetesLauncher.java:105)}}

{{at hudson.slaves.SlaveComputer$1.call(SlaveComputer.java:288)}}

{{at jenkins.util.ContextResettingExecutorService$2.call(ContextResettingExecutorService.java:46)}}

{{at java.util.concurrent.FutureTask.run(FutureTask.java:266)}}

{{at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)}}

{{at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)}}

{{at java.lang.Thread.run(Thread.java:748)}}

{{Apr 02, 2018 10:52:26 PM org.csanchez.jenkins.plugins.kubernetes.KubernetesSlave _terminate}}
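The 422 response names the rejected field as `spec.containers[0].volumeMounts[6].mountPath`: the seventh volume mount (index 6) of the first container in the generated pod has no path. The minimal Python sketch below assumes the pod spec is available as a plain dict shaped like the Kubernetes Pod API. It shows how such a field path maps onto the spec and how to list every mount with an empty `mountPath`. The helper names (`resolve_field`, `mounts_missing_path`) and the sample pod are hypothetical. The sample does not reproduce the pod the plugin actually generated.

```python
import re
from typing import Any, Dict, List

# One segment of a Kubernetes field path, e.g. "containers[0]" or "mountPath".
_SEGMENT = re.compile(r"([A-Za-z_]\w*)(?:\[(\d+)\])?")


def resolve_field(obj: Any, field_path: str) -> Any:
    """Follow a field path such as 'spec.containers[0].volumeMounts[6]'
    through nested dicts and lists and return the value it points at."""
    for segment in field_path.split("."):
        match = _SEGMENT.fullmatch(segment)
        if match is None:
            raise ValueError(f"unsupported path segment: {segment!r}")
        key, index = match.group(1), match.group(2)
        obj = obj[key]
        if index is not None:
            obj = obj[int(index)]
    return obj


def mounts_missing_path(pod: Dict[str, Any]) -> List[str]:
    """Return the field path of every volumeMount whose mountPath is absent or
    empty, the condition the API server reported as 'Required value'."""
    problems = []
    containers = pod.get("spec", {}).get("containers", [])
    for c_idx, container in enumerate(containers):
        for m_idx, mount in enumerate(container.get("volumeMounts", [])):
            if not mount.get("mountPath"):
                problems.append(
                    f"spec.containers[{c_idx}].volumeMounts[{m_idx}].mountPath"
                )
    return problems


# Hypothetical pod spec: six mounts with paths plus a seventh (index 6) whose
# path is empty. Container and volume names are made up for illustration.
pod = {
    "spec": {
        "containers": [
            {
                "name": "example",
                "volumeMounts": [
                    {"name": f"volume-{i}", "mountPath": f"/mnt/{i}"} for i in range(6)
                ]
                + [{"name": "volume-6", "mountPath": ""}],
            }
        ]
    }
}

for field in mounts_missing_path(pod):
    # Drop the trailing ".mountPath" to print the offending mount entry itself.
    print(field, "->", resolve_field(pod, field.rsplit(".", 1)[0]))
```

Run against a dump of the real pod spec, the same check would point at the specific mount the API server refused.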

Here's the pod template from my config.xml:

{{<org.csanchez.jenkins.plugins.kubernetes.PodTemplate>}}

{{ <inheritFrom></inheritFrom>}}

{{ <name>standard-1</name>}}

{{ <namespace></namespace>}}

{{ <privileged>false</privileged>}}

{{ <alwaysPullImage>false</alwaysPullImage>}}

{{ <instanceCap>20</instanceCap>}}

{{ <slaveConnectTimeout>100</slaveConnectTimeout>}}

{{ <idleMinutes>30</idleMinutes>}}

{{ <activeDeadlineSeconds>0</activeDeadlineSeconds>}}

{{ <label>standard-1 worker</label>}}

{{ <nodeSelector></nodeSelector>}}

{{ <nodeUsageMode>NORMAL</nodeUsageMode>}}

{{ <customWorkspaceVolumeEnabled>true</customWorkspaceVolumeEnabled>}}

{{ <workspaceVolume class="org.csanchez.jenkins.plugins.kubernetes.volumes.workspace.EmptyDirWorkspaceVolume">}}

{{ <memory>false</memory>}}

{{ </workspaceVolume>}}

{{ <volumes>}}

{{ <org.csanchez.jenkins.plugins.kubernetes.volumes.SecretVolume>}}

{{ <mountPath>/home/jenkins/XXXX</mountPath>}}

{{ <secretName>XXXXXXXXX</secretName>}}

{{ </org.csanchez.jenkins.plugins.kubernetes.volumes.SecretVolume>}}

{{ <org.csanchez.jenkins.plugins.kubernetes.volumes.SecretVolume>}}

{{ <mountPath>/docker-cfg</mountPath>}}

{{ <secretName>XXXXXXXXX</secretName>}}

{{ </org.csanchez.jenkins.plugins.kubernetes.volumes.SecretVolume>}}

{{ <org.csanchez.jenkins.plugins.kubernetes.volumes.SecretVolume>}}

{{ <mountPath>/gcloud</mountPath>}}

{{ <secretName>XXXXXXXXX</secretName>}}

{{ </org.csanchez.jenkins.plugins.kubernetes.volumes.SecretVolume>}}

{{ <org.csanchez.jenkins.plugins.kubernetes.volumes.EmptyDirVolume>}}

{{ <mountPath>/home/jenkins/workspace</mountPath>}}

{{ <memory>false</memory>}}

{{ </org.csanchez.jenkins.plugins.kubernetes.volumes.EmptyDirVolume>}}

{{ <org.csanchez.jenkins.plugins.kubernetes.volumes.EmptyDirVolume>}}

{{ <mountPath>/tmp</mountPath>}}

{{ <memory>false</memory>}}

{{ </org.csanchez.jenkins.plugins.kubernetes.volumes.EmptyDirVolume>}}

{{ <org.csanchez.jenkins.plugins.kubernetes.volumes.EmptyDirVolume>}}

{{ <mountPath>/var/lib/docker</mountPath>}}

{{ <memory>false</memory>}}

{{ </org.csanchez.jenkins.plugins.kubernetes.volumes.EmptyDirVolume>}}

{{ </volumes>}}

{{ <containers>}}

{{ <org.csanchez.jenkins.plugins.kubernetes.ContainerTemplate>}}

{{ <name>jnlp</name>}}

{{ <image>gcr.io/myproj/cyrusbio-jnlp-minion:master</image>}}

{{ <privileged>false</privileged>}}

{{ <alwaysPullImage>true</alwaysPullImage>}}

{{ <workingDir>/home/jenkins</workingDir>}}

{{ <command></command>}}

{{ <args></args>}}

{{ <ttyEnabled>true</ttyEnabled>}}

{{ <resourceRequestCpu></resourceRequestCpu>}}

{{ <resourceRequestMemory></resourceRequestMemory>}}

{{ <resourceLimitCpu></resourceLimitCpu>}}

{{ <resourceLimitMemory></resourceLimitMemory>}}

{{ <envVars>}}

{{ <org.csanchez.jenkins.plugins.kubernetes.model.KeyValueEnvVar>}}

{{ <key>DOCKER_HOST</key>}}

{{ <value>tcp://127.0.0.1:2375</value>}}

{{ </org.csanchez.jenkins.plugins.kubernetes.model.KeyValueEnvVar>}}

{{ <org.csanchez.jenkins.plugins.kubernetes.model.KeyValueEnvVar>}}

{{ <key>GOOGLE_APPLICATION_CREDENTIALS</key>}}

{{ <value>/gcloud/credentials.json</value>}}

{{ </org.csanchez.jenkins.plugins.kubernetes.model.KeyValueEnvVar>}}

{{ <org.csanchez.jenkins.plugins.kubernetes.model.KeyValueEnvVar>}}

{{ <key>CLOUDSDK_AUTH_CREDENTIAL_FILE_OVERRIDE</key>}}

{{ <value>/gcloud/credentials.json</value>}}

{{ </org.csanchez.jenkins.plugins.kubernetes.model.KeyValueEnvVar>}}

{{ </envVars>}}

{{ <ports/>}}

{{ <livenessProbe>}}

{{ <execArgs></execArgs>}}

{{ <timeoutSeconds>0</timeoutSeconds>}}

{{ <initialDelaySeconds>0</initialDelaySeconds>}}

{{ <failureThreshold>0</failureThreshold>}}

{{ <periodSeconds>0</periodSeconds>}}

{{ <successThreshold>0</successThreshold>}}

{{ </livenessProbe>}}

{{ </org.csanchez.jenkins.plugins.kubernetes.ContainerTemplate>}}

{{ <org.csanchez.jenkins.plugins.kubernetes.ContainerTemplate>}}

{{ <name>docker-engine</name>}}

{{ <image>docker:dind</image>}}

{{ <privileged>true</privileged>}}

{{ <alwaysPullImage>false</alwaysPullImage>}}

{{ <workingDir></workingDir>}}

{{ <command></command>}}

{{ <args></args>}}

{{ <ttyEnabled>true</ttyEnabled>}}

{{ <resourceRequestCpu>1</resourceRequestCpu>}}

{{ <resourceRequestMemory>2Gi</resourceRequestMemory>}}

{{ <resourceLimitCpu>2100m</resourceLimitCpu>}}

{{ <resourceLimitMemory>3Gi</resourceLimitMemory>}}

{{ <envVars/>}}

{{ <ports/>}}

{{ <livenessProbe>}}

{{ <execArgs></execArgs>}}

{{ <timeoutSeconds>0</timeoutSeconds>}}

{{ <initialDelaySeconds>0</initialDelaySeconds>}}

{{ <failureThreshold>0</failureThreshold>}}

{{ <periodSeconds>0</periodSeconds>}}

{{ <successThreshold>0</successThreshold>}}

{{ </livenessProbe>}}

{{ </org.csanchez.jenkins.plugins.kubernetes.ContainerTemplate>}}

{{ </containers>}}

{{ <envVars/>}}

{{ <annotations/>}}

{{ <imagePullSecrets/>}}

{{</org.csanchez.jenkins.plugins.kubernetes.PodTemplate>}}
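As a hedged diagnostic sketch (Python, standard library only), the template above can be scanned for path-like fields that are empty. It parses the `PodTemplate` XML with `xml.etree.ElementTree`. It then reports volumes whose `mountPath` is blank and containers whose `workingDir` is blank. Checking `workingDir` rests on an assumption, not on anything in the logs: the plugin may mount the workspace volume at each container's working directory, so a blank value could surface as a blank `mountPath`. The function name `empty_path_fields` is hypothetical. The embedded XML is a trimmed copy of the template above that keeps only the fields the check reads.

```python
import xml.etree.ElementTree as ET
from typing import List

# Trimmed copy of the reported PodTemplate: only names, mount paths and working dirs.
TEMPLATE = """\
<org.csanchez.jenkins.plugins.kubernetes.PodTemplate>
  <name>standard-1</name>
  <volumes>
    <org.csanchez.jenkins.plugins.kubernetes.volumes.SecretVolume>
      <mountPath>/home/jenkins/XXXX</mountPath>
    </org.csanchez.jenkins.plugins.kubernetes.volumes.SecretVolume>
    <org.csanchez.jenkins.plugins.kubernetes.volumes.SecretVolume>
      <mountPath>/docker-cfg</mountPath>
    </org.csanchez.jenkins.plugins.kubernetes.volumes.SecretVolume>
    <org.csanchez.jenkins.plugins.kubernetes.volumes.SecretVolume>
      <mountPath>/gcloud</mountPath>
    </org.csanchez.jenkins.plugins.kubernetes.volumes.SecretVolume>
    <org.csanchez.jenkins.plugins.kubernetes.volumes.EmptyDirVolume>
      <mountPath>/home/jenkins/workspace</mountPath>
    </org.csanchez.jenkins.plugins.kubernetes.volumes.EmptyDirVolume>
    <org.csanchez.jenkins.plugins.kubernetes.volumes.EmptyDirVolume>
      <mountPath>/tmp</mountPath>
    </org.csanchez.jenkins.plugins.kubernetes.volumes.EmptyDirVolume>
    <org.csanchez.jenkins.plugins.kubernetes.volumes.EmptyDirVolume>
      <mountPath>/var/lib/docker</mountPath>
    </org.csanchez.jenkins.plugins.kubernetes.volumes.EmptyDirVolume>
  </volumes>
  <containers>
    <org.csanchez.jenkins.plugins.kubernetes.ContainerTemplate>
      <name>jnlp</name>
      <workingDir>/home/jenkins</workingDir>
    </org.csanchez.jenkins.plugins.kubernetes.ContainerTemplate>
    <org.csanchez.jenkins.plugins.kubernetes.ContainerTemplate>
      <name>docker-engine</name>
      <workingDir></workingDir>
    </org.csanchez.jenkins.plugins.kubernetes.ContainerTemplate>
  </containers>
</org.csanchez.jenkins.plugins.kubernetes.PodTemplate>
"""


def empty_path_fields(template_xml: str) -> List[str]:
    """Report volumes with an empty mountPath and containers with an empty workingDir."""
    root = ET.fromstring(template_xml)
    findings = []
    volumes = root.find("volumes")
    for index, volume in enumerate(volumes if volumes is not None else []):
        kind = volume.tag.rsplit(".", 1)[-1]  # e.g. "SecretVolume"
        # findtext returns "" for an element with no text, None if it is missing.
        if not (volume.findtext("mountPath") or "").strip():
            findings.append(f"volume {index} ({kind}) has an empty mountPath")
    containers = root.find("containers")
    for container in (containers if containers is not None else []):
        name = container.findtext("name")
        if not (container.findtext("workingDir") or "").strip():
            findings.append(f"container {name!r} has an empty workingDir")
    return findings


for finding in empty_path_fields(TEMPLATE):
    print(finding)
```

On this trimmed template the check flags only the `docker-engine` container, whose `workingDir` is empty. Whether that field produced the rejected mount is not established by this report.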

- Is duplicated by: JENKINS-50801 Cannot provision Slave Pod: mountPath: Required value (Closed)