- Bug
- Resolution: Fixed
- Major
- None
- jenkins-core 2.205, workflow-durable-task-step 2.35

I build my own Jenkins image and sanity-check it by starting it in a Docker container and trying to log in to it. I do this with the following Jenkinsfile:

    stages {
        stage('Build Jenkins Master Image') {
            steps {
                sh(
                    script: """
                        cd Jenkins-Master
                        docker pull jenkins:latest
                        docker build --rm -t ${IMAGE_TAG} .
                    """
                )
            }
        }
        stage('Image sanity check') {
            steps {
                withCredentials([string(credentialsId: 'CASC_VAULT_TOKEN', variable: 'CASC_VAULT_TOKEN'),
                                 usernamePassword(credentialsId: 'Forge_service_account', passwordVariable: 'JENKINS_PASSWORD', usernameVariable: 'JENKINS_LOGIN')]) {
                    sh(
                        script: """
                            docker run -e CASC_VAULT_TOKEN=${CASC_VAULT_TOKEN} \
                                --name jenkins \
                                -d \
                                -p 8080:8080 ${IMAGE_TAG}
                            mvn -Djenkins.test.timeout=${GLOBAL_TEST_TIMEOUT} -B -f Jenkins-Master/pom.xml test
                        """
                    )
                }
            }
        }
        // later stages (such as 'Push Jenkins Master Image', visible in the log) are omitted here
    }

The test is successful, but the build fails with the following log:

    [2019-11-25T10:33:38.333Z] Nov 25, 2019 11:33:37 AM ch.ti8m.forge.jenkins.logintest.LocalhostJenkinsRule before
    [2019-11-25T10:33:38.333Z] INFO: Waiting for Jenkins instance... (response code 503)
    [2019-11-25T10:33:43.628Z] Nov 25, 2019 11:33:42 AM ch.ti8m.forge.jenkins.logintest.LocalhostJenkinsRule before
    [2019-11-25T10:33:43.628Z] INFO: Waiting for Jenkins instance... (response code 503)
    [Pipeline] }
    [Pipeline] // withCredentials
    [Pipeline] }
    [Pipeline] // withEnv
    [Pipeline] }
    [Pipeline] // stage
    [Pipeline] stage
    [Pipeline] { (Push Jenkins Master Image)
    Stage "Push Jenkins Master Image" skipped due to earlier failure(s)
    [Pipeline] }
    [Pipeline] // stage
    [Pipeline] }
    [Pipeline] // withEnv
    [Pipeline] }
    [Pipeline] // ansiColor
    [Pipeline] }
    [Pipeline] // timeout
    [Pipeline] }
    [Pipeline] // timestamps
    [Pipeline] }
    [Pipeline] // withEnv
    [Pipeline] }
    [Pipeline] // withEnv
    [Pipeline] }
    [Pipeline] // node
    [Pipeline] End of Pipeline
    ERROR: missing workspace /data/ci/workspace/orge_ti8m-ci-2.0_main-instance_8 on srvzh-jenkinsnode-tst-005
    Finished: FAILURE

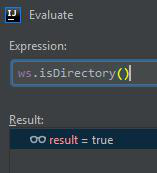

While debugging workflow-durable-task-step I noticed some strange behavior. My breakpoint is set at DurableTaskStep.java#L386; when execution halts there, it means ws.isDirectory() has just returned false. Yet when I evaluate ws.isDirectory() manually in the debugger during that same pause, it returns true.
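One way to check whether the directory test itself gives unstable results is to repeat it several times with timestamps. The following is only a minimal sketch under stated assumptions: `WorkspaceProbe` and `probe` are hypothetical names, not part of Jenkins or workflow-durable-task-step, and the code uses `java.nio.file.Files.isDirectory` on the local file system, so it would have to be run directly on the agent and does not reproduce the remote call the plugin makes.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper for illustration; not part of Jenkins or any plugin. */
final class WorkspaceProbe {

    /**
     * Checks {@code dir} {@code attempts} times, sleeping {@code delayMillis}
     * between checks, and returns the result of each check in order.
     */
    static List<Boolean> probe(Path dir, int attempts, long delayMillis)
            throws InterruptedException {
        List<Boolean> results = new ArrayList<>();
        for (int i = 0; i < attempts; i++) {
            boolean present = Files.isDirectory(dir);
            // Log every attempt with a timestamp so flips can be correlated with other events.
            System.out.printf("%s attempt %d: isDirectory=%b%n", Instant.now(), i + 1, present);
            results.add(present);
            if (i < attempts - 1) {
                Thread.sleep(delayMillis);
            }
        }
        return results;
    }

    public static void main(String[] args) throws InterruptedException {
        // Default path is copied from the error message; pass another path as the first argument.
        Path ws = Paths.get(args.length > 0 ? args[0]
                : "/data/ci/workspace/orge_ti8m-ci-2.0_main-instance_8");
        List<Boolean> results = probe(ws, 5, 1000L);
        boolean changed = results.contains(true) && results.contains(false);
        System.out.println(changed ? "result changed between checks" : "result was stable");
    }
}
```

If the result is stable on the agent itself, that would suggest looking at how the check is performed remotely rather than at the file system; if it changes between attempts, something is probably creating or removing the workspace while the step runs.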

Any ideas what might cause this?