New Feature

Resolution: Fixed

Critical

None
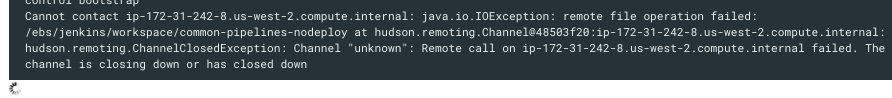

While my pipeline was running, the node that was executing logic terminated. I see this at the bottom of my console output:

Cannot contact ip-172-31-242-8.us-west-2.compute.internal: java.io.IOException: remote file operation failed: /ebs/jenkins/workspace/common-pipelines-nodeploy at hudson.remoting.Channel@48503f20:ip-172-31-242-8.us-west-2.compute.internal: hudson.remoting.ChannelClosedException: Channel "unknown": Remote call on ip-172-31-242-8.us-west-2.compute.internal failed. The channel is closing down or has closed down

There's a spinning arrow below it.

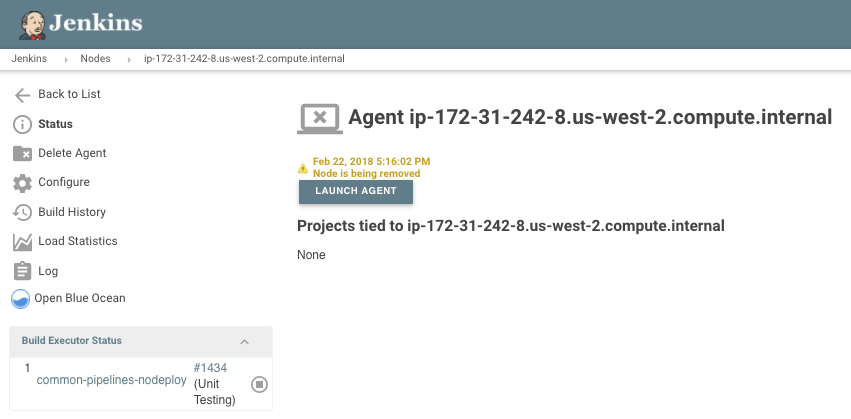

I have a cron script that uses the Jenkins master CLI to remove nodes that have stopped responding. When I examine this node's page in the Jenkins web UI, it looks like the node is still running that job, and I see an orange label that says "Feb 22, 2018 5:16:02 PM Node is being removed".

I'm wondering what would be a better way to say "If the channel closes down, retry the work on another node with the same label"?

Things seem stuck. Please advise.
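For illustration, the behavior asked for above ("retry the work on another node with the same label") might be written in scripted Pipeline roughly as follows. This is only a sketch. It assumes plugin versions recent enough that the `retry` step accepts a `conditions` list and that the `agent()` and `nonresumable()` conditions are available. The label `my-label` and the `make` command are hypothetical placeholders, not taken from the pipeline in this report.

```groovy
// Sketch only: re-run the whole node block when the failure looks agent-related
// (for example, a closed channel or a removed node), not on ordinary build errors.
// 'my-label' and the shell command are hypothetical placeholders.
retry(count: 2, conditions: [agent(), nonresumable()]) {
    node('my-label') {
        // Each attempt requests a fresh executor matching the label,
        // so the retry may land on a different node.
        checkout scm
        sh 'make'
    }
}
```

Putting `node` inside `retry`, rather than the other way around, is what lets a second attempt be scheduled on another agent with the same label. The work inside the block should be safe to repeat from the start.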

- causes
  - JENKINS-73618 ws step re-provisions already provisioned workspace if controller restarted in midway during build (Open)
  - JENKINS-69936 PWD returning wrong path (Resolved)
  - JENKINS-70528 node / dir / node on same agent sets PWD to that of dir rather than @2 workspace (Resolved)

- depends on
  - JENKINS-30383 SynchronousNonBlockingStepExecution should allow restart of idempotent steps (Resolved)

- is duplicated by
  - JENKINS-49241 pipeline hangs if slave node momentarily disconnects (Open)
  - JENKINS-47868 Pipeline durability hang when slave node disconnected (Reopened)
  - JENKINS-43781 Quickly detecting and restarting a job if the job's slave disconnects (Resolved)
  - JENKINS-57675 Pipeline steps running forever when executor fails (Resolved)
  - JENKINS-47561 Pipelines wait indefinitely for kubernetes slaves to come back online (Closed)
  - JENKINS-43607 Jenkins pipeline not aborted when the machine running docker container goes offline (Resolved)
  - JENKINS-56673 Better handling of ChannelClosedException in Declarative pipeline (Resolved)

- is related to
  - JENKINS-41854 Contextualize a fresh FilePath after an agent reconnection (Resolved)

- relates to
  - JENKINS-36013 Automatically abort ExecutorPickle rehydration from an ephemeral node (Closed)
  - JENKINS-61387 SlaveComputer not cleaned up after the channel is closed (Open)
  - JENKINS-67285 if jenkins-agent pod has removed fail fast jobs that use this jenkins-agent pod (Open)
  - JENKINS-71113 AgentErrorCondition should handle "missing workspace" error (Open)
  - JENKINS-60507 Pipeline stuck when allocating machine | node block appears to be neither running nor scheduled (Reopened)
  - JENKINS-59340 Pipeline hangs when Agent pod is Terminated (Resolved)
  - JENKINS-35246 Kubernetes agents not getting deleted in Jenkins after pods are deleted (Resolved)
  - JENKINS-70333 Default for Declarative agent retries (Open)
  - JENKINS-68963 build logs should contain if a spot agent is terminated (Open)

- links to